-

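A couple of days ago I saw the news that 360doc Personal Library (360 doc个人图书馆) is being transferred free of charge, and it left me with mixed feelings. For many people born in the 1980s, this product, which has been with them for ten or even twenty years, was never just an online library: it was an important repository of content from the early internet. Keeping it running for more than twenty years took real dedication and passion from the team behind it, and every practitioner should respect that. Beyond that respect, though, I want to share some broader reflections the product prompts.

Many companies now come to us for advice on implementing IPD (Integrated Product Development). In our IPD consulting, the first step is always to help clients build an "investment mindset". The investment mindset IPD emphasizes treats product development as a long-term investment that must produce a return, not as a pure labor of love. Just as ordinary people weigh cost, expected return and risk before buying stocks or funds, a product team should be clear from the moment a project is approved about what problem the product solves, what users are willing to pay for, how R&D and operating costs will be covered, and what the long-term profit logic is.

Besides 360doc, there is Cnblogs (博客园) and many similar products on the market today. They set out full of idealism but never found a workable path to commercialization; despite huge user bases they could not monetize, and they ended up fighting for survival. Looking beyond the personal library case, a product team running on passion alone ("用爱发电", generating power with love) may get a product shipped and keep it alive, but it will struggle to make the product thrive. Only clear, sober strategic planning, especially planning of the business model, can sustain a product's value.

1. 360doc's predicament

360doc's predicament is quite representative. It holds a massive resource base of 80 million users and 1.1 billion articles, yet it never found a good way to turn that traffic into meaningful revenue. Many similar products run into the same limits when they try to commercialize: with advertising, quality brands worry about being associated with low-quality content, so ad premiums stay low; with paid memberships, users see no compelling exclusive content and have little willingness to pay; with business partnerships, unclear content copyright scares partners away. In the end, the huge user base did not become the key to profitability; instead, server maintenance, content moderation and other costs turned it into a heavy operating burden.

In recent years, sites like 360doc have relied mainly on internet advertising and search-engine traffic for revenue. Whenever a search engine adjusts its algorithm, traffic swings sharply, so revenue is unstable. A single-leg business model also leaves the product with little resilience when the market changes.

The spread of AI tools made things worse still. Users used to rely on 360doc to save and search articles, essentially to acquire and manage knowledge efficiently. Today, AI tools can aggregate huge amounts of information, extract key points quickly, and even generate customized content on demand: what once required tedious searching and organizing on the platform is now available for free and with little effort. This strikes directly at 360doc's core value and makes an already difficult commercialization effort even harder to sustain.

2. Does a product have to commercialize?

Some may ask: "Why must a product commercialize? Isn't it better to stay pure?" I understand this view very well; everyone hopes the products they love can stay free of the smell of money. But the reality is that running any product costs money: server maintenance, team salaries and feature development all require real investment. As a common saying in the open-source world goes: "Free things are often the most expensive, because they stop being updated when the money runs out." That 360doc lasted more than twenty years is already remarkable; many similar products quietly disappear after three to five years when their funding dries up.

Let me clear up a misconception: commercialization is not a betrayal of passion; it protects the product's vitality. Real commercialization is never just putting up ads or charging fees. It is about finding the balance between user value and commercial value: neither forcing monetization at the expense of user experience, nor avoiding commercialization so completely that the product loses the resources to keep improving. As an article I read earlier, 《人间清醒,开源一定要做商业化》 ("Clear-eyed: open source must commercialize"), put it, open source does not mean free; good open-source products make money through paid subscriptions, custom services, technical support and similar means, and only that lets them keep investing in the product and ultimately benefit more users.

Back to 360doc: it was not without commercial possibilities. Had it planned early with IPD's investment mindset, it might have taken a different path. For example, it could first have sorted out content copyrights and built a library of high-quality exclusive content, then launched tiered memberships that attract paying users with benefits such as no ads, larger storage and precise search. For enterprise customers, it could have developed customized offerings such as team knowledge bases and document collaboration to open up B2B revenue. It could even have used AI to turn its existing articles into structured knowledge and offered value-added services such as intelligent Q&A and topic digests, rebuilding its core competitiveness.

In product development, the problem IPD's investment mindset tries to avoid is this: development must not be "take one step and see what happens", let alone "build it first and figure it out later". A mature product strategy should contain three core parts: first, user value positioning, which pins down the core pain point the product solves; second, a technical R&D plan, which ensures the product is stable and scalable; and third, business model design, which lays out the path to monetization in advance. The three reinforce one another and none can be left out. Just as an investor does not look only at a project's prospects while ignoring how it makes money, a product team should not either: a product without a business model is like a car without fuel; however beautiful the design, it can only sit where it is.

Of course, I am not saying every product should chase profit from day one. For many early-stage products, building a user base and validating demand first is necessary, but that does not mean avoiding commercial thinking altogether. On the contrary, every stage of iteration should lay groundwork for sustainable development. During the user-growth stage, for example, you can use user research to gauge acceptance of paid features; during feature optimization, prioritize features that both improve user experience and pave the way for later monetization; once traffic stabilizes, build a diversified revenue structure promptly to reduce dependence on any single channel.

At this point some may feel that commercialization is just too hard, especially for products with a sentimental following, where the slightest hint of monetization draws user suspicion. In fact, what users resent is not commercialization itself but crude commercialization: pop-up ads in every context, forcing payment for core features, changing the product's positioning at will to make money, or even, in a predatory grab for cash, locking articles behind a VIP paywall. As long as monetization is reasonable and gives users additional value, most users are willing to pay for a good product. Open-source software offers many successful examples, such as Red Hat, MongoDB, GitLab, and China's ZenTao (禅道) project management software. With an "open-source core plus commercial add-ons" model, they have made money while continuing to improve their products, a win for both users and the team.

Finally, back to the free transfer of 360doc. It is a wake-up call for everyone who builds products: in a fiercely competitive market with accelerating technological change, running on passion can only be a temporary measure. Only a clear business model and a diversified revenue structure can keep a product's value alive over the long term. For users, an "imperfect but alive" product that keeps iterating and improving is far more valuable than a "perfect but dead" product that has stalled because the money ran out. I hope more products will find a balance between idealism and business in the future, staying true to their original aims while going further!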

-

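With terabit-scale DDoS attacks now commonplace, the complexity and stealth of CC attacks have made them the number-one threat to web application security. The deep integration of Huawei Cloud "Advanced Anti-DDoS (DDoS高防) + WAF" gives developers a full-stack protection system from the network layer to the application layer.

New characteristics of CC attacks and protection challenges

In recent years, as DDoS protection capabilities have generally improved, attackers have shifted their focus to CC attacks, which are harder to defend against. A CC attack (Challenge Collapsar) is essentially a malicious application-layer traffic attack: the attacker controls a botnet or a cluster of proxy servers and imitates huge numbers of "normal users" sending requests to the target server. Unlike traditional brute-force DDoS attacks, a CC attack does its damage through disguise: every request complies with the HTTP specification and each session consumes very few resources, but in aggregate they can quickly exhaust the server's CPU, memory or database connections.

CC attacks observed in 2025 show three notable characteristics:

- Mixed protocols: attackers no longer limit themselves to HTTP floods but combine them with TCP connection attacks, low-rate request attacks and other techniques. In one gaming-industry case handled by Tencent Cloud protection, the attackers used a targeted mix of TCP reflection (32%), TCP connection attacks (28%), TCP layer-4 CC (25%) and HTTP CC (15%).
- Intelligent behavior: modern CC tools can imitate human interaction patterns such as page-navigation intervals and mouse-movement trajectories. In one e-commerce incident, malicious traffic even replicated a real user's complete path, "browse product, add to cart, payment fails, retry", defeating traditional frequency-based rules.
- Precisely targeted resource consumption: attackers use reconnaissance to pinpoint bottleneck endpoints. In one CC attack on an internet-finance platform, the attackers targeted the risk-control query endpoint; each query touched 6 database tables, so just 50 QPS of attack traffic drove CPU load to 100%.

Against these challenges, a single protection layer is no longer enough. Huawei Cloud's "Advanced Anti-DDoS + WAF" integrated solution achieves a "1 + 1 > 2" protection effect through layered filtering and collaborative analysis.

Integrated protection architecture

Huawei Cloud "Advanced Anti-DDoS + WAF" uses a serial traffic-diversion architecture: all external traffic must pass through the Advanced Anti-DDoS scrubbing nodes and then the WAF nodes before it reaches the origin server. This is not simple stacking; deep integration makes the two capabilities complementary.

Figure 1: Huawei Cloud "Advanced Anti-DDoS + WAF" integrated architecture. Traffic passes through the Advanced Anti-DDoS layer (L3/L4 protection) and then the WAF layer (L7 protection), forming a defense-in-depth system. Advanced Anti-DDoS nodes are deployed in Huawei Cloud's global scrubbing centers, with terabit-level protection capacity per node; the WAF cluster uses a distributed detection engine that supports deep HTTP/HTTPS parsing.

The core advantages of this architecture lie in three areas:

- Capacity scaling: Advanced Anti-DDoS nodes have terabit-level elastic bandwidth and can absorb very large volumetric attacks. When traffic exceeds a threshold, BGP route diversion is triggered automatically to steer traffic to the nearest scrubbing center. Measurements from 2025 show that a single node can withstand mixed attacks of up to 1.5 Tbps.
- Depth of protocol parsing: Advanced Anti-DDoS handles network-layer attacks such as SYN floods and UDP reflection, reducing the load on WAF; WAF focuses on HTTP/S parsing and supports full-traffic TLS decryption, so it can analyze even encrypted CC attacks.
- Resource isolation: scrubbing nodes are physically separated from business resources; attack traffic is terminated at edge nodes and only clean traffic is forwarded back to the origin. In one Huawei Cloud video-platform customer case, origin bandwidth consumption stayed below 50 Mbps even during an 800 Gbps attack.

Note a key constraint when deploying this architecture: only domain names can be protected, and a given Advanced Anti-DDoS IP + port can be configured with only one origin server type. To protect both api.example.com and www.example.com, configure a policy for each separately.

Core technology: an AI-driven CC protection system

The core of Huawei Cloud WAF's CC protection is a multi-layer detection engine that combines rule matching with AI behavior analysis to cover attacks from simple to complex.

Basic protection rule configuration

The first line of defense consists of customizable access-control rules:

```yaml
# Example: Huawei Cloud WAF CC protection rule configuration fragment
rule_name: anti_cc_rule1
state: enabled
detection_mode: frequency_based
threshold:
  interval: 60        # detection window (seconds)
  requests: 300       # request-count threshold
action: block         # action when triggered (block)
scope:
  - path: /checkout   # protected path
    method: POST      # HTTP method
exception_list:
  - ip: 192.168.1.0/24        # trusted IP range
  - user_agent: Googlebot*    # search-engine crawler
```

Rules like this must be tuned to the characteristics of each business:

- For user login endpoints, set a strict threshold (for example, 5 requests/minute).
- For static resource directories, relax the limit appropriately (for example, 60 requests/second).
- For API gateways, enable JSON parameter parsing and check for high-frequency requests with identical parameters.

Intelligent protection engine

Against low-rate, distributed CC attacks that evade frequency detection, Huawei Cloud uses a three-stage AI protection model:

[Figure 2 diagram: traffic collection → feature extraction (client fingerprint: TLS fingerprint / HTTP header features; session behavior: page-navigation path / dwell time; request timing: request-interval stability) → AI model analysis (supervised learning model trained on historical attack samples; unsupervised clustering to detect anomalous access groups; graph neural network to identify botnet topology) → decision and enforcement]

Figure 2: Huawei Cloud WAF intelligent CC protection AI model. Low-rate CC attacks are identified through multi-dimensional feature extraction and hybrid-model analysis. The supervised learning model is trained on labeled samples; unsupervised clustering discovers new attack patterns; the graph neural network analyzes correlations between IPs.

In a real-world engagement on an e-commerce platform, the engine performed as follows:

- Abnormal traffic was detected 47 seconds after the attack began.
- Within 1 minute, 5 protection rules were generated automatically (2 CC rules + 3 precise rules).
- Scrubbing accuracy reached 99.3%, with a false-block rate of only 0.2%.

Key technical innovations

- Traffic fingerprinting: client fingerprints are generated through passive traffic analysis, so even when attackers rotate dynamic IPs, their traffic can be linked to the same source through TCP stack characteristics (such as initial window size and TSO/GSO configuration).
- Dynamic policy adjustment: the protection mode switches automatically with real-time traffic load:
  - Low load: strict mode, with deep analysis of every session.
  - High load: performance-first mode, relaxing some checks to reduce latency.
  - Under attack: emergency mode, activating pre-trained AI models.
- Collaborative protection: Advanced Anti-DDoS and WAF share threat intelligence. When Advanced Anti-DDoS detects an IP launching a TCP connection attack, WAF starts monitoring that IP's HTTP behavior in advance, even if the IP has not reached a WAF threshold.

Real-world combat: analysis of large-scale attack cases

Case 1: Protecting a gaming platform against a mixed attack

During the 2025 Spring Festival, a mobile game platform came under sustained DDoS attack:

- Scale: 1,300+ attacks in 20 days, peaking at 500 Gbps.
- Techniques: TCP reflection (32%) + TCP connection attacks (28%) + TCP layer-4 CC (25%).

The protection team used a layered countermeasure strategy:

[Figure 3 sequence diagram, participants: attack traffic, Advanced Anti-DDoS, WAF, origin. Steps: (1) mixed attack traffic (TCP reflection / connection attacks) arrives; (2) Advanced Anti-DDoS enables the TCP reflection protection algorithm; (3) it filters invalid connections (SYN Cookie validation); (4) it forwards the scrubbed traffic; (5) WAF applies AI detection of TCP layer-4 CC; (6) WAF performs traffic fingerprint analysis; (7) only legitimate player traffic reaches the origin.]

Figure 3: Timeline of mixed-attack protection for the gaming platform. Advanced Anti-DDoS handles network-layer attacks (steps 2-3) and WAF handles application-layer CC attacks (steps 5-6), providing coordinated protection.

Key technical breakthroughs:

- A self-developed TCP reflection algorithm solved the problem of one-way detection on protection devices.
- AI-driven layer-4 CC protection avoided the lengthy process of modifying the client SDK.
- Traffic fingerprinting precisely distinguished bots (zombie hosts) from real players.

After the attacks subsided, the protection system stored the generated rules as "defense assets", so similar attacks can be blocked instantly in the future.

Case 2: Precisely blocking a low-rate CC attack

In 2024, a bank's credit card center was hit by a carefully designed low-rate CC attack:

- The attacker used 10,000+ residential IPs.
- Each IP sent only 2-3 requests per minute.
- The attack specifically targeted the OTP SMS endpoint (/api/send_otp).

Traditional frequency-based rules were completely ineffective. Huawei Cloud WAF enabled multi-dimensional correlation analysis:

1. Behavior analysis: normal users follow the path home page → login page → enter phone number → trigger OTP, whereas attack traffic called the OTP endpoint directly.
2. Device fingerprint: attack traffic lacked typical browser characteristics (such as Canvas fingerprints and WebGL support).
3. Time distribution: normal requests were concentrated in working hours (9:00-18:00), whereas attack traffic was spread evenly over 24 hours.

Based on these features, the AI engine built an anomaly scoring model:

Anomaly score = 0.4 × (path anomaly weight) + 0.3 × (device fingerprint weight) + 0.3 × (time distribution weight); a request is judged malicious when the score is greater than 0.8.

This policy blocked 95.7% of malicious requests while keeping the OTP delivery success rate for normal users at 99.6%.

Protection policy optimization in practice

Fine-grained rule configuration

Effective CC protection must avoid one-size-fits-all policies. Recommended settings by scenario:

| Business type | Recommended configuration | Reference anomaly threshold | Special considerations |
|---|---|---|---|
| E-commerce payment | Strict mode + human verification | 5 requests/minute | Watch for fluctuations in the payment failure rate |
| Media playback | Relaxed mode + connection rate limiting | 100 requests/minute | Special allowance for CDN edge nodes |
| API gateway | Parameter-level inspection + client certificate authentication | Dynamic, based on the business baseline | Guard against API key brute-forcing |
| Online game matches | Protocol compliance checks + packet length analysis | 20 requests/second | Match the game's heartbeat packet signatures |

Monitoring and continuous optimization

The protection system must keep iterating to handle new attack types.

Core monitoring metrics:

- Cleaning ratio = blocked requests / total requests (healthy range: 0.1%-5%)
- False-block rate = wrongly blocked requests / total blocked requests (threshold: < 0.5%)
- Attack response time: delay from attack onset to rule enforcement (target: < 1 minute)

Optimization loop:

1. Analyze block logs weekly to identify URLs with frequent false blocks.
2. Add exception rules or adjust thresholds for falsely blocked endpoints.
3. Run attack-and-defense drills monthly to test policy effectiveness.
4. Update AI training samples quarterly to keep the models accurate.

In the Huawei Cloud console, the "Protection Effect Comparison" (防护效果对比) feature lets you evaluate the effect of policy changes directly:

- Before: normal-request block rate 0.38%; malicious-request miss rate 12.7%
- After (optimized exception rules): normal-request block rate 0.09% ↓; malicious-request miss rate 10.2% ↓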
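The frequency-based rule `anti_cc_rule1` (300 requests per 60-second window on POST /checkout, with a trusted IP range and a crawler exemption) can be approximated with a per-client sliding-window counter. The following is a minimal sketch under stated assumptions, not Huawei Cloud WAF's implementation: it keys the counter on client IP, treats the scope as path AND method, and matches the `Googlebot*` wildcard as a user-agent prefix. The class and method names are hypothetical.

```python
import ipaddress
from collections import defaultdict, deque


class SlidingWindowCCRule:
    """Hypothetical frequency-based CC rule modeled on anti_cc_rule1:
    block a client that sends more than `max_requests` in-scope requests
    within any `interval`-second window."""

    def __init__(self, interval=60, max_requests=300, path="/checkout",
                 method="POST", trusted_cidrs=("192.168.1.0/24",),
                 ua_prefixes=("Googlebot",)):
        self.interval = interval
        self.max_requests = max_requests
        self.path = path
        self.method = method
        self.trusted = [ipaddress.ip_network(c) for c in trusted_cidrs]
        self.ua_prefixes = tuple(ua_prefixes)
        self.hits = defaultdict(deque)  # client IP -> timestamps of recent in-scope hits

    def _exempt(self, ip, user_agent):
        addr = ipaddress.ip_address(ip)
        if any(addr in net for net in self.trusted):
            return True
        # A prefix match stands in for the "Googlebot*" wildcard.
        return user_agent.startswith(self.ua_prefixes)

    def check(self, ip, path, method, user_agent, now):
        """Return "allow" or "block" for one request arriving at `now` (seconds)."""
        if path != self.path or method != self.method:
            return "allow"  # outside the rule's scope
        if self._exempt(ip, user_agent):
            return "allow"
        window = self.hits[ip]
        window.append(now)  # blocked requests still count, so a flooding client stays blocked
        while window and window[0] <= now - self.interval:
            window.popleft()  # drop hits that have slid out of the detection window
        return "block" if len(window) > self.max_requests else "allow"


rule = SlidingWindowCCRule()
# 301 requests from one client within about 30 seconds: the 301st exceeds the threshold.
verdicts = [rule.check("203.0.113.7", "/checkout", "POST", "curl/8.0", t * 0.1)
            for t in range(301)]
print(verdicts[299], verdicts[300])  # allow block
```

A sliding window avoids the burst that a fixed per-minute counter allows at window boundaries, at the cost of storing one timestamp per recent hit. Note that a user-agent exemption on its own is easy to spoof, so in practice crawler traffic is usually verified by other means as well.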
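The anomaly score in Case 2 is a weighted sum with weights 0.4/0.3/0.3 and a cut-off of 0.8. The sketch below assumes each component is normalized to [0, 1]; how each component is derived here (1.0 for a skipped login flow, 1.0 for a missing browser fingerprint, and an off-hours share scaled against a uniform 24-hour spread) is a hypothetical simplification for illustration, not the engine's actual feature extraction.

```python
from dataclasses import dataclass

# Weights and cut-off as given in the case description.
WEIGHTS = {"path": 0.4, "device": 0.3, "time": 0.3}
THRESHOLD = 0.8
OFF_HOURS_SHARE_IF_UNIFORM = 15 / 24  # 9:00-18:00 covers 9 of 24 hours


@dataclass
class ClientFeatures:
    followed_login_flow: bool      # home -> login -> phone number -> OTP was observed
    has_browser_fingerprint: bool  # Canvas/WebGL signals present
    off_hours_share: float         # share of this client's requests outside 9:00-18:00


def anomaly_score(f: ClientFeatures) -> float:
    """Weighted sum of three component scores, each in [0, 1]."""
    path = 0.0 if f.followed_login_flow else 1.0
    device = 0.0 if f.has_browser_fingerprint else 1.0
    # Hypothetical normalization: an off-hours share at the uniform-24h level scores 1.0.
    timing = min(1.0, max(0.0, f.off_hours_share / OFF_HOURS_SHARE_IF_UNIFORM))
    return WEIGHTS["path"] * path + WEIGHTS["device"] * device + WEIGHTS["time"] * timing


def is_malicious(f: ClientFeatures) -> bool:
    return anomaly_score(f) > THRESHOLD


bot = ClientFeatures(followed_login_flow=False, has_browser_fingerprint=False,
                     off_hours_share=0.625)
user = ClientFeatures(followed_login_flow=True, has_browser_fingerprint=True,
                      off_hours_share=0.2)
print(is_malicious(bot), is_malicious(user))  # True False
```

With these weights, the path and device components together reach only 0.7, so a client is flagged only when its timing component also exceeds one third (0.7 + 0.3 × t > 0.8). No single signal can trigger a block on its own, which is the point of combining them.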
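The three core monitoring metrics can be checked mechanically against the healthy ranges listed above (cleaning ratio 0.1%-5%, false-block rate below 0.5%, response time under 1 minute). A minimal sketch; the function name and the sample counts are hypothetical, and treating the cleaning-ratio bounds as inclusive is an assumption.

```python
def evaluate_protection(total_requests, blocked_requests, wrongly_blocked,
                        response_seconds):
    """Compute the cleaning ratio and false-block rate and compare all three
    metrics with the healthy ranges from the monitoring guidelines."""
    cleaning_ratio = blocked_requests / total_requests
    false_block_rate = wrongly_blocked / blocked_requests if blocked_requests else 0.0
    return {
        "cleaning_ratio": cleaning_ratio,
        "false_block_rate": false_block_rate,
        "cleaning_ratio_ok": 0.001 <= cleaning_ratio <= 0.05,  # 0.1%-5%
        "false_block_ok": false_block_rate < 0.005,            # < 0.5%
        "response_ok": response_seconds < 60,                  # < 1 minute
    }


# Hypothetical week of traffic: 2% of requests blocked, 60 of them wrongly.
report = evaluate_protection(total_requests=1_000_000, blocked_requests=20_000,
                             wrongly_blocked=60, response_seconds=47)
print(report["cleaning_ratio_ok"], report["false_block_ok"], report["response_ok"])
```

Feeding the weekly log analysis into a check like this makes the "add exception rules or adjust thresholds" step in the optimization loop easier to trigger consistently.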

-

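The considerateness principle in product design means that a product should fully account for and satisfy users' feelings, needs and habits, so that, like a thoughtful person, it looks after users and gives them support and help. The principle is explained in detail below.

1. Definition and importance

The considerateness principle asks designers to think from the user's point of view, understand the user's emotions, and then act appropriately to offer support, help or reassurance. It matters because it increases user satisfaction and loyalty and makes the product more competitive.

2. Specific manifestations

- Caring about user preferences: the product should remember users' behavior and preferences, such as frequently visited websites and commonly used features, and use this information to offer personalized services and recommendations. Personalization algorithms reduce operational complexity and hesitation when choosing, so users feel the platform's recommendations are tailored to them.
- Deference and respect: the product should respect users' choices and decisions and not arbitrarily judge or restrict their behavior. When a user insists on an action, the product may point out the possible risks but should not restrict the action on its own initiative.
- Helpfulness: the product should proactively offer help and support, such as tutorials and FAQs. When users need help, it should offer a better solution than they expect.
- Good judgment: the product should be able to judge whether user behavior is safe, for example by detecting logins from unfamiliar devices or locations and alerting the user promptly. It should also help users protect their private data, for example by encouraging strong passwords and promptly reporting improper operations.
- Anticipating needs: the product should predict what users will need next and prepare in advance. For example, while a user browses the web, the product can predict which links the user is likely to click and preload that content to reduce waiting time.
- Responsibility and reliability: the product should run stably without excessive errors or failures. When something goes wrong, it should notify users promptly and provide a solution or a fallback.
- Not adding to the user's burden: the product should minimize unnecessary notifications and interruptions, such as excessive pop-ups and ads. It should also resolve its own problems where possible, for example through automatic error recovery and automatic updates, to reduce the work users have to do.
- Timely notification: the product should promptly notify users about things they care about, such as payment status and order status. Notifications should be concise and clear, without redundant information.
- Confidence and robustness: the product should stand by its own behavior and not needlessly second-guess the user or itself. For example, when deleting a file, it can skip the confirmation dialog by default to make the operation faster, while providing a way to recover deleted files in case of mistakes.
- Preventing careless mistakes: the product should use careful visual and textual feedback to help users avoid simple mistakes. For example, if a user accidentally selects all friends when sending a message, the product should warn them and ask for confirmation.

3. Practical applications

The considerateness principle is applied widely: browsers can remember information about sites users log in to regularly, shopping sites can save delivery addresses and fill them in automatically at the next checkout, and social media can remind users to check unread messages. All of these designs take users' needs and habits into account and improve usability and user experience.

4. Summary and outlook

Considerateness is one of the important principles of product design. By following it, designers can create more humane and personalized products and increase user satisfaction and loyalty. As technology develops and user needs change, the principle will play an even larger role in product design, and designers should keep learning and exploring new methods and techniques for applying it to create better products. Feel free to share your thoughts and discuss!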
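The "confidence and robustness" guideline (delete without a confirmation dialog, but let users recover deleted files) is commonly implemented as a soft delete: the item moves to a recycle bin and can be restored until it is purged. A minimal in-memory sketch; the class, its methods and the 30-day default retention period are hypothetical choices for illustration.

```python
import time


class RecycleBin:
    """Hypothetical soft-delete store: delete() needs no confirmation because
    restore() can undo it until the retention period expires."""

    def __init__(self, retention_seconds=30 * 24 * 3600):
        self.files = {}   # name -> content of live files
        self.trash = {}   # name -> (content, deleted_at); a newer delete replaces an older entry
        self.retention = retention_seconds

    def delete(self, name, now=None):
        now = time.time() if now is None else now
        self.trash[name] = (self.files.pop(name), now)

    def restore(self, name):
        content, _ = self.trash.pop(name)
        self.files[name] = content

    def purge_expired(self, now=None):
        """Permanently remove items older than the retention period; return their names."""
        now = time.time() if now is None else now
        expired = [n for n, (_, deleted_at) in self.trash.items()
                   if now - deleted_at >= self.retention]
        for n in expired:
            del self.trash[n]
        return expired


bin_ = RecycleBin()
bin_.files["report.docx"] = "draft v3"
bin_.delete("report.docx")   # no "Are you sure?" dialog
bin_.restore("report.docx")  # the mistake is undone
print(bin_.files)
```

Making the action reversible is what allows the product to skip the interruption: the confirmation step moves from before the action to an optional undo after it.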

-

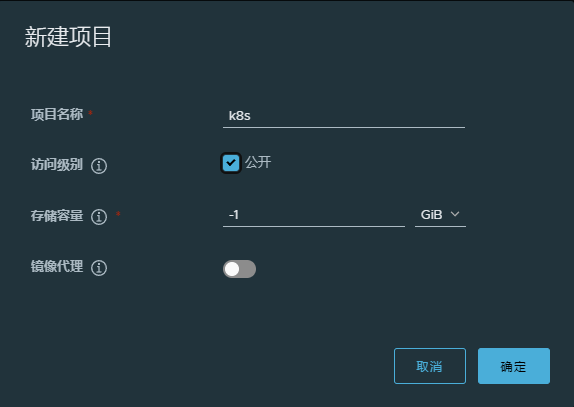

Static PV provisioning has an obvious drawback: its maintenance cost is too high. Kubernetes therefore supports dynamic PV provisioning, implemented with the StorageClass object. However, according to the official documentation, NFS has no built-in provisioner, so an external provisioner plugin must be installed to provision NFS-backed PVs dynamically.

On k8s-node2, in the `nfs-client` directory, create `class.yaml`:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "true"
```

Create `deployment.yaml` (set `NFS_SERVER` and `NFS_PATH`, and the matching `nfs` volume, to your NFS server address and export directory):

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.0.21       # NFS server address
            - name: NFS_PATH
              value: /ifs/kubernetes    # NFS export directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.0.21
            path: /ifs/kubernetes
```

Create `rbac.yaml`:

```yaml
kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

Apply all the manifests in the directory and check that the provisioner Pod is running:

```bash
kubectl apply -f .
[root@k8s-node2 ~]# kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
dns-test                                  1/1     Running   0          83m
nfs-client-provisioner-78c97f97c6-ndtsd   1/1     Running   0          11m
```

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-wp
  labels:
    name: pv-wp
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  hostPath:
    path: /wp
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: chartmuseum
  name: chartmuseum
  namespace: chartmuseum
spec:
  replicas: 1
  selector:
    matchLabels:
      app: chartmuseum
  template:
    metadata:
      labels:
        app: chartmuseum
    spec:
      containers:
        - image: chartmuseum/chartmuseum:latest
          imagePullPolicy: IfNotPresent
          name: chartmuseum
          ports:
            - containerPort: 8080
              protocol: TCP
          env:
            - name: DEBUG
              value: "1"
            - name: STORAGE
              value: local
            - name: STORAGE_LOCAL_ROOTDIR
              value: /charts
          volumeMounts:
            - mountPath: /charts
              name: charts-volume
      volumes:
        - name: charts-volume
          hostPath:
            path: /data/charts
            type: Directory
---
apiVersion: v1
kind: Service
metadata:
  name: chartmuseum
  namespace: chartmuseum
spec:
  ports:
    - port: 8080
      protocol: TCP
      targetPort: 8080
  selector:
    app: chartmuseum
```

After starting the cloud host from the k8s-allinone image, wait for the cluster to finish initializing, then check the cluster status and the hostname:

```bash
[root@master ~]# kubectl get nodes,pod -A
NAME          STATUS   ROLES                  AGE     VERSION
node/master   Ready    control-plane,master   5m14s   v1.22.1

NAMESPACE        NAME                                   READY   STATUS    RESTARTS   AGE
kube-dashboard   pod/dashboard-7575cf67b7-5s5hz         1/1     Running   0          4m51s
kube-dashboard   pod/dashboard-agent-69456b7f56-nzghp   1/1     Running   0          4m50s
kube-system      pod/coredns-78fcd69978-klknx           1/1     Running   0          4m58s
kube-system      pod/coredns-78fcd69978-xwzgr           1/1     Running   0          4m58s
kube-system      pod/etcd-master                        1/1     Running   0          5m14s
kube-system      pod/kube-apiserver-master              1/1     Running   0          5m11s
kube-system      pod/kube-controller-manager-master     1/1     Running   0          5m13s
kube-system      pod/kube-flannel-ds-9gdnl              1/1     Running   0          4m51s
kube-system      pod/kube-proxy-r7gq9                   1/1     Running   0          4m58s
kube-system      pod/kube-scheduler-master              1/1     Running   0          5m11s
kube-system      pod/metrics-server-77564bc84d-tlrp7    1/1     Running   0          4m50s
```

The output shows that the node and all Pods are healthy.

Check the hostname of the current node:

```bash
[root@master ~]# hostnamectl
   Static hostname: master
         Icon name: computer-vm
           Chassis: vm
        Machine ID: cc2c86fe566741e6a2ff6d399c5d5daa
           Boot ID: 94e196b737b6430bac5fbc0af88cbcd1
    Virtualization: kvm
  Operating System: CentOS Linux 7 (Core)
       CPE OS Name: cpe:/o:centos:centos:7
            Kernel: Linux 3.10.0-1160.el7.x86_64
      Architecture: x86-64
```

Change the hostname of the edge node:

```bash
[root@localhost ~]# hostnamectl set-hostname kubeedge-node
[root@kubeedge-node ~]# hostnamectl
   Static hostname: kubeedge-node
         Icon name: computer-vm
           Chassis: vm
        Machine ID: cc2c86fe566741e6a2ff6d399c5d5daa
           Boot ID: c788c13979e0404eb5afcd9b7bc8fd4b
    Virtualization: kvm
  Operating System: CentOS Linux 7 (Core)
       CPE OS Name: cpe:/o:centos:centos:7
            Kernel: Linux 3.10.0-1160.el7.x86_64
      Architecture: x86-64
```

Configure the hosts file on both the cloud node and the edge node:

```bash
[root@master ~]# cat >> /etc/hosts <<EOF
10.26.17.135 master
10.26.7.126 kubeedge-node
EOF
[root@kubeedge-node ~]# cat >> /etc/hosts <<EOF
10.26.17.135 master
10.26.7.126 kubeedge-node
EOF
```

(2) Configure yum repositories on the cloud and edge nodes

Download the installation package (kubernetes_kubeedge_allinone.tar.gz) to the /root directory of the cloud master node and extract it to /opt:

```bash
[root@master ~]# curl -O http://mirrors.douxuedu.com/KubeEdge/kubernetes_kubeedge_allinone.tar.gz
[root@master ~]# tar -zxvf kubernetes_kubeedge_allinone.tar.gz -C /opt/
[root@master ~]# ls
docker-compose-Linux-x86_64  harbor-offline-installer-v2.5.0.tgz  kubeedge               kubernetes_kubeedge.tar.gz
ec-dashboard-sa.yaml         k8simage                             kubeedge-counter-demo  yum
```

Configure the yum repository on the cloud master node:

```bash
[root@master ~]# mv /etc/yum.repos.d/* /media/
[root@master ~]# cat > /etc/yum.repos.d/local.repo <<EOF
[docker]
name=docker
baseurl=file:///opt/yum
gpgcheck=0
enabled=1
EOF
[root@master ~]# yum -y install vsftpd
[root@master ~]# echo anon_root=/opt >> /etc/vsftpd/vsftpd.conf
```

Start the service and enable it at boot:

```bash
[root@master ~]# systemctl enable vsftpd --now
```

Configure the yum repository on the edge node kubeedge-node:

```bash
[root@kubeedge-node ~]# mv /etc/yum.repos.d/* /media/
[root@kubeedge-node ~]# cat >/etc/yum.repos.d/ftp.repo <<EOF
[docker]
name=docker
baseurl=ftp://master/yum
gpgcheck=0
enabled=1
EOF
```

(3) Configure Docker on the cloud and edge nodes

Docker is already installed on the cloud master node; configure it to pull images from the local registry:

```bash
[root@master ~]# vi /etc/docker/daemon.json
{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "200m",
    "max-file": "5"
  },
  "default-ulimits": {
    "nofile": {
      "Name": "nofile",
      "Hard": 655360,
      "Soft": 655360
    },
    "nproc": {
      "Name": "nproc",
      "Hard": 655360,
      "Soft": 655360
    }
  },
  "live-restore": true,
  "oom-score-adjust": -1000,
  "max-concurrent-downloads": 10,
  "max-concurrent-uploads": 10,
  "insecure-registries": ["0.0.0.0/0"]
}
[root@master ~]# systemctl daemon-reload
[root@master ~]# systemctl restart docker
```

Install Docker on the edge node and configure it to pull images from the local registry:

```bash
[root@kubeedge-node ~]# yum -y install docker-ce
[root@kubeedge-node ~]# vi /etc/docker/daemon.json
{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "200m",
    "max-file": "5"
  },
  "default-ulimits": {
    "nofile": {
      "Name": "nofile",
      "Hard": 655360,
      "Soft": 655360
    },
    "nproc": {
      "Name": "nproc",
      "Hard": 655360,
      "Soft": 655360
    }
  },
  "live-restore": true,
  "oom-score-adjust": -1000,
  "max-concurrent-downloads": 10,
  "max-concurrent-uploads": 10,
  "insecure-registries": ["0.0.0.0/0"]
}
[root@kubeedge-node ~]# systemctl daemon-reload
[root@kubeedge-node ~]# systemctl enable docker --now
```

(4) Deploy a Harbor registry on the cloud node

Deploy a local Harbor image registry on the cloud master node:

```bash
[root@master ~]# cd /opt/
[root@master opt]# mv docker-compose-Linux-x86_64 /usr/bin/docker-compose
[root@master opt]# tar -zxvf harbor-offline-installer-v2.5.0.tgz
[root@master opt]# cd harbor && cp harbor.yml.tmpl harbor.yml
[root@master harbor]# vi harbor.yml
hostname: 10.26.17.135    # set hostname to the cloud node's IP
[root@master harbor]# ./install.sh
……
✔ ----Harbor has been installed and started successfully.----
[root@master harbor]# docker login -u admin -p Harbor12345 master
….
Login Succeeded
```

Open a browser, go to the Harbor web UI at the cloud master node's IP, log in with the default credentials (admin/Harbor12345), and create a project named "k8s", as shown in Figure 2-1.

Figure 2-1: Creating the k8s project

Load the local images and push them to the Harbor registry:

```bash
[root@master harbor]# cd /opt/k8simage/ && sh load.sh
[root@master k8simage]# sh push.sh
请输入您的Harbor仓库地址(不需要带http):10.26.17.135    # prompt: "Enter your Harbor registry address (without http)"; enter the cloud master node's IP
```

(5) Configure node affinity

On the cloud node, configure affinity for the flannel and kube-proxy Pods so that they are not scheduled onto edge nodes:

```bash
[root@master k8simage]# kubectl edit daemonset -n kube-system kube-flannel-ds
......
spec:    # the Pod template's spec (spec.template.spec), next to containers
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/os
            operator: In
            values:
            - linux
          - key: node-role.kubernetes.io/edge    # add this entry, placed before the containers field
            operator: DoesNotExist
[root@master k8simage]# kubectl edit daemonset -n kube-system kube-proxy
spec:    # the Pod template's spec (spec.template.spec)
  affinity:    # add this block before the containers field
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: node-role.kubernetes.io/edge
            operator: DoesNotExist
[root@master k8simage]# kubectl get pod -n kube-system
NAME                    READY   STATUS    RESTARTS   AGE
kube-flannel-ds-q7mfq   1/1     Running   0          13m
kube-proxy-wxhkm        1/1     Running   0          39s
```

After the edits, both Pods are recreated and show the Running status.

(6) Set up the KubeEdge cloud side

On the cloud master node, prepare the packages and service configuration files the cloud side needs:

```bash
[root@master k8simage]# cd /opt/kubeedge/
[root@master kubeedge]# mv keadm /usr/bin/
[root@master kubeedge]# mkdir /etc/kubeedge
[root@master kubeedge]# tar -zxf kubeedge-1.11.1.tar.gz
[root@master kubeedge]# cp -rf kubeedge-1.11.1/build/tools/* /etc/kubeedge/
[root@master kubeedge]# cp -rf kubeedge-1.11.1/build/crds/ /etc/kubeedge/
[root@master kubeedge]# tar -zxf kubeedge-v1.11.1-linux-amd64.tar.gz
[root@master kubeedge]# cp -rf * /etc/kubeedge/
```

Start the cloud service:

```bash
[root@master kubeedge]# cd /etc/kubeedge/
[root@master kubeedge]# keadm deprecated init --kubeedge-version=1.11.1 --advertise-address=10.26.17.135
……
KubeEdge cloudcore is running, For logs visit:  /var/log/kubeedge/cloudcore.log
CloudCore started
```

- `--kubeedge-version=`: the KubeEdge version. It is mandatory for an offline installation; otherwise keadm tries to download the latest version.
- `--advertise-address=`: the IP address exposed to edge nodes. Enter the private IP of the node where keadm runs; if the cloud side must connect with an on-premises cluster, enter the public IP instead. Because both nodes here are in the cloud, the private IP is enough.

Check the cloud service:

```bash
[root@master kubeedge]# netstat -ntpl | grep cloudcore
tcp6       0      0 :::10000                :::*                    LISTEN      974/cloudcore
tcp6       0      0 :::10002                :::*                    LISTEN      974/cloudcore
```

(7) Set up the KubeEdge edge side

On the edge node kubeedge-node, copy the packages from the cloud node:

```bash
[root@kubeedge-node ~]# scp root@master:/usr/bin/keadm /usr/local/bin/
[root@kubeedge-node ~]# mkdir /etc/kubeedge
[root@kubeedge-node ~]# cd /etc/kubeedge/
[root@kubeedge-node kubeedge]# scp -r root@master:/etc/kubeedge/* /etc/kubeedge/
```

On the cloud master node, retrieve the join token. When you copy the token, remove any line breaks:

```bash
[root@master kubeedge]# keadm gettoken
1f0f213568007af1011199f65ca6405811573e44061c903d0f24c7c0379a5f65.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2OTEwNTc2ODN9.48eiBKuwwL8bFyQcfYyicnFSogra0Eh0IpyaRMg5NvY
```

On the edge node kubeedge-node, join the cluster:

```bash
[root@kubeedge-node ~]# keadm deprecated join --cloudcore-ipport=10.26.17.135:10000 --kubeedge-version=1.11.1 --token=1f0f213568007af1011199f65ca6405811573e44061c903d0f24c7c0379a5f65.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2OTEwNTc2ODN9.48eiBKuwwL8bFyQcfYyicnFSogra0Eh0IpyaRMg5NvY
install MQTT service successfully.
......
[Run as service] service file already exisits in /etc/kubeedge//edgecore.service, skip download
kubeedge-v1.11.1-linux-amd64/
kubeedge-v1.11.1-linux-amd64/edge/
kubeedge-v1.11.1-linux-amd64/edge/edgecore
kubeedge-v1.11.1-linux-amd64/version
kubeedge-v1.11.1-linux-amd64/cloud/
kubeedge-v1.11.1-linux-amd64/cloud/csidriver/
kubeedge-v1.11.1-linux-amd64/cloud/csidriver/csidriver
kubeedge-v1.11.1-linux-amd64/cloud/iptablesmanager/
kubeedge-v1.11.1-linux-amd64/cloud/iptablesmanager/iptablesmanager
kubeedge-v1.11.1-linux-amd64/cloud/cloudcore/
kubeedge-v1.11.1-linux-amd64/cloud/cloudcore/cloudcore
kubeedge-v1.11.1-linux-amd64/cloud/controllermanager/
kubeedge-v1.11.1-linux-amd64/cloud/controllermanager/controllermanager
kubeedge-v1.11.1-linux-amd64/cloud/admission/
kubeedge-v1.11.1-linux-amd64/cloud/admission/admission

KubeEdge edgecore is running, For logs visit: journalctl -u edgecore.service -xe
```

If yum reports an error, delete the extra repository files and rerun the join command:

```bash
[root@kubeedge-node kubeedge]# rm -rf /etc/yum.repos.d/epel*
```

Check that the service status is active:

```bash
[root@kubeedge-node kubeedge]# systemctl status edgecore
● edgecore.service
   Loaded: loaded (/etc/systemd/system/edgecore.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2023-08-03 06:05:39 UTC; 15s ago
 Main PID: 8405 (edgecore)
    Tasks: 15
   Memory: 34.3M
   CGroup: /system.slice/edgecore.service
           └─8405 /usr/local/bin/edgecore
```

On the cloud master node, check that the edge node has joined:

```bash
[root@master kubeedge]# kubectl get nodes
NAME            STATUS   ROLES                  AGE     VERSION
kubeedge-node   Ready    agent,edge             5m19s   v1.22.6-kubeedge-v1.11.1
master          Ready    control-plane,master   176m    v1.22.1
```

If two nodes are listed and both are Ready, the edge node has joined successfully.

(8) Deploy the monitoring service on the cloud node

On the cloud master node, set CLOUDCOREIPS to the cloud master node's IP:

```bash
[root@master kubeedge]# export CLOUDCOREIPS="10.26.17.135"
```

Then generate the stream certificates:

```bash
[root@master kubeedge]# cd /etc/kubeedge/
[root@master kubeedge]# ./certgen.sh stream
```

Update the cloud configuration so that monitoring data can be sent to the cloud master node:

```bash
[root@master kubeedge]# vi /etc/kubeedge/config/cloudcore.yaml
  # in the modules section
  cloudStream:
    enable: true          # change to true
    streamPort: 10003
  router:
    address: 0.0.0.0
    enable: true          # change to true
    port: 9443
    restTimeout: 60
```

Update the edge configuration:

```bash
[root@kubeedge-node kubeedge]# vi /etc/kubeedge/config/edgecore.yaml
  # in the modules section
  edgeStream:
    enable: true          # change to true
    handshakeTimeout: 30
  serviceBus:
    enable: true          # change to true
```

Restart the cloud service:

```bash
[root@master kubeedge]# kill -9 $(netstat -lntup | grep cloudcore | awk 'NR==1 {print $7}' | cut -d '/' -f 1)
[root@master kubeedge]# cp -rfv cloudcore.service /usr/lib/systemd/system/
[root@master kubeedge]# systemctl start cloudcore.service
[root@master kubeedge]# netstat -lntup | grep 10003
tcp6       0      0 :::10003                :::*                    LISTEN      15089/cloudcore
```

If `netstat -lntup | grep 10003` shows port 10003 listening, cloudStream has been enabled successfully.

Restart the edge service:

```bash
[root@kubeedge-node kubeedge]# systemctl restart edgecore.service
```

On the cloud node, check the collected metrics:

```bash
[root@master kubeedge]# kubectl top nodes
NAME            CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
kubeedge-node   24m          0%     789Mi           6%
master          278m         3%     8535Mi          54%
```

After deployment it may take a while before kubeedge-node's resource usage appears, because the data has not yet been synchronized to the cloud node.

#### 2.1.2 Install dependency packages

First install the gcc compiler. Some OS versions already include gcc; check with `gcc --version`. If it is missing, install it first, otherwise the Python build may fail. Python versions earlier than 3.7.0 do not need libffi-devel.

On the cloud node, download the offline yum repository and install the packages:

```bash
[root@master ~]# curl -O http://mirrors.douxuedu.com/KubeEdge/python-kubeedge/gcc-repo.tar.gz
[root@master ~]# tar -zxvf gcc-repo.tar.gz
[root@master ~]# vi /etc/yum.repos.d/gcc.repo
[gcc]
name=gcc
baseurl=file:///root/gcc-repo
gpgcheck=0
enabled=1
[root@master ~]# yum -y install zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel readline-devel tk-devel gdbm-devel db4-devel libpcap-devel xz-devel libffi-devel gcc
```

#### 2.1.3 Compile and install Python 3.7

On the cloud node, download the Python 3.7 source and related packages, then extract and compile:

```bash
[root@master ~]# curl -O http://mirrors.douxuedu.com/KubeEdge/python-kubeedge/Python-3.7.3.tar.gz
[root@master ~]# curl -O http://mirrors.douxuedu.com/KubeEdge/python-kubeedge/volume_packages.tar.gz
[root@master ~]# mkdir /usr/local/python3 && tar -zxvf Python-3.7.3.tar.gz
[root@master ~]# cd Python-3.7.3/
[root@master Python-3.7.3]# ./configure --prefix=/usr/local/python3
[root@master Python-3.7.3]# make && make install
[root@master Python-3.7.3]# cd /root
```

#### 2.1.4 Create Python symlinks

Extract the volume_packages archive, symlink the compiled Python 3.7 into /usr/bin, and check the version:

```bash
[root@master ~]# tar -zxvf volume_packages.tar.gz
[root@master ~]# yes | mv volume_packages/site-packages/* /usr/local/python3/lib/python3.7/site-packages/
[root@master ~]# ln -s /usr/local/python3/bin/python3.7 /usr/bin/python3
[root@master ~]# ln -s /usr/local/python3/bin/pip3.7 /usr/bin/pip3
[root@master ~]# python3 --version
Python 3.7.3
[root@master ~]# pip3 list
Package                  Version
------------------------ --------------------
absl-py                  1.4.0
aiohttp                  3.8.4
aiosignal                1.3.1
anyio                    3.7.0
async-timeout            4.0.2
asynctest                0.13.0
......(remaining output omitted)......
```

### 2.2 Set up MongoDB

#### 2.2.1 Install MongoDB

Download mongoRepo.tar.gz to the edge node and extract it:

```bash
[root@kubeedge-node ~]# curl -O http://mirrors.douxuedu.com/KubeEdge/python-kubeedge/mongoRepo.tar.gz
[root@kubeedge-node ~]# tar -zxvf mongoRepo.tar.gz -C /opt/
[root@kubeedge-node ~]# vi /etc/yum.repos.d/mongo.repo
[mongo]
name=mongo
enabled=1
gpgcheck=0
baseurl=file:///opt/mongoRepo
```

Install MongoDB on the edge node:

```bash
[root@kubeedge-node ~]# yum -y install mongodb*
```

After installation, configure MongoDB:

```bash
[root@kubeedge-node ~]# vi /etc/mongod.conf
# find the following section and edit it
net:
  port: 27017
  bindIp: 0.0.0.0    # change to 0.0.0.0
```

Then restart the service and enable it at boot:

```bash
[root@kubeedge-node ~]# systemctl restart mongod && systemctl enable mongod
```

Verify the service:

```bash
[root@kubeedge-node ~]# netstat -lntup | grep 27017
tcp        0      0 0.0.0.0:27017           0.0.0.0:*               LISTEN      10195/mongod
```

If port 27017 is listening, MongoDB has started successfully.

#### 2.2.2 Create the database

On the edge node, log in to MongoDB and create the database and collections:

```bash
[root@kubeedge-node ~]# mongo
> show dbs
admin   0.000GB
config  0.000GB
local   0.000GB
> use edgesql
switched to db edgesql
> show collections
> db.createCollection("users")
{ "ok" : 1 }
> db.createCollection("ai_data")
{ "ok" : 1 }
> db.createCollection("ai_model")
{ "ok" : 1 }
> show collections
ai_data
ai_model
users
>
```

Press Ctrl+D to exit the mongo shell.

### 2.3 Set up the H5 front end

ydy_cloudapp_front_dist is the compiled H5 front-end application; it only needs to be served by a web server.

#### 2.3.1 Run the H5 front end on Linux

On the edge node, download and extract the gcc-repo and ydy_cloudapp_front_dist archives, configure the yum repository, install Nginx, and copy the extracted files into the Nginx web root:

```bash
[root@kubeedge-node ~]# curl -O http://mirrors.douxuedu.com/KubeEdge/python-kubeedge/gcc-repo.tar.gz
[root@kubeedge-node ~]# curl -O http://mirrors.douxuedu.com/KubeEdge/python-kubeedge/ydy_cloudapp_front_dist.tar.gz
[root@kubeedge-node ~]# tar -zxvf gcc-repo.tar.gz
[root@kubeedge-node ~]# tar -zxvf ydy_cloudapp_front_dist.tar.gz
[root@kubeedge-node ~]# vi /etc/yum.repos.d/gcc.repo
[gcc]
name=gcc
baseurl=file:///root/gcc-repo
gpgcheck=0
enabled=1
[root@kubeedge-node ~]# yum install -y nginx
[root@kubeedge-node ~]# rm -rf /usr/share/nginx/html/*
[root@kubeedge-node ~]# mv ydy_cloudapp_front_dist/index.html /usr/share/nginx/html/
[root@kubeedge-node ~]# mv ydy_cloudapp_front_dist/static/ /usr/share/nginx/html/
[root@kubeedge-node ~]# vi /etc/nginx/nginx.conf
# Configure the reverse proxy: find the server block near the end of the file and edit it as follows
server {
    listen       80;
    listen       [::]:80;
    server_name  localhost;
    root         /usr/share/nginx/html;

    # Load configuration files for the default server block.
    include /etc/nginx/default.d/*.conf;

    error_page 404 /404.html;
    location = /404.html {
    }

    location ~ ^/cloudedge/(.*) {
        proxy_pass http://10.26.17.135:30850/cloudedge/$1;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        add_header 'Access-Control-Allow-Origin' '*';
        add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS';
        add_header 'Access-Control-Allow-Headers' 'Authorization, Content-Type';
        add_header 'Access-Control-Allow-Credentials' 'true';
    }

    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
    }
}
[root@kubeedge-node ~]# nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
[root@kubeedge-node ~]# systemctl restart nginx && systemctl enable nginx
```
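The NFS steps install the provisioner but stop before testing it. A quick check is a PersistentVolumeClaim that references the `managed-nfs-storage` StorageClass: if the provisioner is working, it should create a matching PV on the NFS export and the claim should become Bound. The sketch below builds such a claim in Python and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The claim name `test-claim`, the `ReadWriteMany` access mode and the 1Mi size are hypothetical values.

```python
import json


def nfs_test_claim(name="test-claim", storage_class="managed-nfs-storage",
                   size="1Mi", namespace="default"):
    """Build a PersistentVolumeClaim that asks the given StorageClass
    for a dynamically provisioned volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "storageClassName": storage_class,  # must match metadata.name in class.yaml
            "accessModes": ["ReadWriteMany"],   # NFS can be mounted read-write by many nodes
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(nfs_test_claim(), indent=2))
```

Usage, assuming the script is saved under the hypothetical name `make_claim.py`: `python3 make_claim.py > test-claim.json && kubectl apply -f test-claim.json`, then `kubectl get pvc test-claim` to see whether the claim is bound.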
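The affinity rules added in step (5) keep the flannel and kube-proxy DaemonSets off edge nodes. The Kubernetes semantics are: `nodeSelectorTerms` are ORed, the `matchExpressions` inside one term are ANDed, `In` requires the label to exist with one of the listed values, and `DoesNotExist` requires the label to be absent. The minimal sketch below evaluates only those two operators. The node label sets are assumptions: the ROLES column `agent,edge` in `kubectl get nodes` is derived from `node-role.kubernetes.io/*` labels, which suggests the edge node carries `node-role.kubernetes.io/edge`, but the full label sets are not shown above.

```python
def expression_matches(expr, labels):
    """Evaluate one matchExpressions entry against a node's labels."""
    key, op = expr["key"], expr["operator"]
    if op == "In":
        return key in labels and labels[key] in expr["values"]
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not covered by this sketch: {op}")


def node_matches(node_selector_terms, labels):
    # Terms are ORed; expressions within one term are ANDed.
    return any(all(expression_matches(e, labels) for e in term["matchExpressions"])
               for term in node_selector_terms)


# The rule added to kube-flannel-ds in step (5).
FLANNEL_TERMS = [{"matchExpressions": [
    {"key": "kubernetes.io/os", "operator": "In", "values": ["linux"]},
    {"key": "node-role.kubernetes.io/edge", "operator": "DoesNotExist"},
]}]

# Hypothetical label sets for the two nodes.
master_labels = {"kubernetes.io/os": "linux", "node-role.kubernetes.io/master": ""}
edge_labels = {"kubernetes.io/os": "linux", "node-role.kubernetes.io/edge": "",
               "node-role.kubernetes.io/agent": ""}

print(node_matches(FLANNEL_TERMS, master_labels))  # True
print(node_matches(FLANNEL_TERMS, edge_labels))    # False
```

Because the edge node carries the edge role label, the `DoesNotExist` expression fails there, so the scheduler never places flannel or kube-proxy on it, while the master still satisfies both expressions.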
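Step (7) warns that line breaks must be removed from the copied token. A small helper can strip them and assemble the same `keadm deprecated join` command used above. This is a hypothetical convenience sketch: its sanity check (a 64-character hex prefix followed by three JWT segments) is based only on the shape of the sample token shown above, not on a documented token format.

```python
import re
import shlex


def clean_token(raw: str) -> str:
    """Remove all whitespace, including line breaks introduced by copy/paste."""
    return re.sub(r"\s+", "", raw)


def build_join_command(raw_token, cloudcore_ip, port=10000, version="1.11.1"):
    """Return the keadm join command line with a cleaned token."""
    token = clean_token(raw_token)
    parts = token.split(".")
    # Shape check derived from the sample token: hex prefix + three JWT segments.
    if (len(parts) != 4 or not re.fullmatch(r"[0-9a-f]{64}", parts[0])
            or not all(parts[1:])):
        raise ValueError("token does not look like the output of 'keadm gettoken'")
    args = ["keadm", "deprecated", "join",
            f"--cloudcore-ipport={cloudcore_ip}:{port}",
            f"--kubeedge-version={version}",
            f"--token={token}"]
    return " ".join(shlex.quote(a) for a in args)


pasted = ("1f0f213568007af1011199f65ca6405811573e44061c903d0f24c7c0379a5f65."
          "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.\n"
          "eyJleHAiOjE2OTEwNTc2ODN9.48eiBKuwwL8bFyQcfYyicnFSogra0Eh0IpyaRMg5NvY\n")
print(build_join_command(pasted, "10.26.17.135"))
```

`shlex.quote` leaves these arguments unquoted because they contain only shell-safe characters, so the printed line matches the command shown in step (7).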

-

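Common problems in MES data traceability and how to solve them

In real-world MES deployments in digital factories, poor design or improper use can cause a number of problems in data traceability. Common ones include:

1. Data quality: data may be entered incorrectly, or be missing or incomplete, which undermines the accuracy of trace results. The key is to strengthen quality management of data collection and entry, for example through automated data collection, data validation mechanisms and staff training to reduce errors.
2. Traceability complexity: in large-scale production, data volumes are huge, and tracing a product's full life cycle may require tracing backward through many steps. To simplify tracing, classify and label data effectively, establish rigorous relationships between data records, and use advanced data analysis techniques to optimize trace paths.
3. System integration difficulties: enterprises often run several independent systems (such as ERP and PLM), and data exchange and interoperability between them can be obstructed, which complicates MES data traceability. The solution is to adopt standardized interfaces and protocols so the systems connect seamlessly and data flows smoothly.
4. Security and privacy: MES traceability involves a great deal of sensitive information, including product designs, production parameters and raw-material suppliers, so ensuring data security and privacy is an important challenge. Measures include encrypted data transmission, permission management and audit trails to protect data confidentiality and integrity.

Common measures for optimizing MES data traceability include:

1. Automated data collection: collect data automatically to reduce manual entry errors and improve data quality and accuracy.
2. Data standardization and classification: establish unified data standards and a classification system, and normalize data to make later queries and tracing easier.
3. Data analysis and mining: apply data analysis techniques to traceability data to uncover potential problems and improvement opportunities, and to optimize production processes and quality control.
4. Stronger system integration: use standardized interfaces and protocols to integrate different systems seamlessly and keep data flowing and consistent.
5. Security and privacy protection: strengthen data security management with encrypted transmission, identity authentication, access control and similar measures to protect data privacy and confidentiality.
6. Training and awareness: train staff and raise their awareness of why MES data traceability matters and how to operate correctly, to ensure accurate and reliable data.

With these measures, MES data traceability becomes more efficient and precise, helping enterprises manage production better and improve product quality.

That concludes this introduction to the traceability features of the 万界星空科技 (Wanjie Xingkong Technology) cloud MES (manufacturing execution system). If you have related needs, feel free to send us a private message or search for the 万界星空科技 official website on Baidu to contact us.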
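Backward traceability, as described under "Traceability complexity", amounts to walking a genealogy graph from a finished product back through every intermediate lot to its raw-material lots. A minimal breadth-first sketch, assuming each lot records which input lots it consumed; the lot IDs and the `consumed_from` mapping are hypothetical and not taken from any particular MES.

```python
from collections import deque


def trace_back(lot_id, consumed_from):
    """Return every upstream lot reachable from `lot_id`, nearest first.
    `consumed_from` maps a lot to the list of lots it was produced from."""
    seen, order = {lot_id}, []
    queue = deque([lot_id])
    while queue:
        current = queue.popleft()
        for parent in consumed_from.get(current, []):
            if parent not in seen:  # a shared input lot is reported only once
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order


# Hypothetical genealogy: finished good <- sub-assemblies <- raw-material lots.
genealogy = {
    "FG-001": ["ASM-10", "ASM-11"],
    "ASM-10": ["RM-STEEL-7", "RM-BOLT-3"],
    "ASM-11": ["RM-STEEL-7", "RM-PAINT-2"],
}
print(trace_back("FG-001", genealogy))
# ['ASM-10', 'ASM-11', 'RM-STEEL-7', 'RM-BOLT-3', 'RM-PAINT-2']
```

Forward tracing (for example, finding every finished product that used a suspect raw-material lot during a recall) is the same traversal over the inverted mapping. Either direction depends on the "rigorous relationships between data records" the article calls for: a missing consumption record silently cuts the trace.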

-

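[Project name]: 人民xxx出版社有限公司 (People's xxx Publishing House Co., Ltd.) cloud migration project

[Delivery location]: Beijing

[Customer requirements]: The main task is to migrate the project currently on Tencent Cloud to Huawei Cloud, covering Nginx, the mini-program web service, the license service, RabbitMQ and the Logstash logging service. The scope includes 10 ECS instances, 2 MariaDB database servers, a 3-node ES logging cluster, 1 Redis cluster and a 3-node MongoDB database. About 213 GB of database data and about 323 GB of object files are to be migrated.

[Migration requirements]:

1. Migration method: downtime is acceptable; the source services must keep serving traffic during the migration, and no data may be lost at cutover.
2. Timeline: the migration must be completed within two weeks, which is a tight schedule.

[Progress]:

| Category | Key task | Planned completion | Actual completion |
|---|---|---|---|
| Business survey | Survey the customer's business architecture and network architecture diagrams | Jul 18 | Jul 18 |
| Migration plan design | Deliver the migration implementation plan | Jul 19 | Jul 19 |
| Resource provisioning & deployment | Provision ECS/CCE/MariaDB/DDS/Redis/CSS/OBS/WAF/ELB on Huawei Cloud | Jul 20 | Jul 20 |
| Load & performance testing | Use Huawei Cloud CPTS to load-test the mini program, web services, PC admin console, middleware services and other systems | Jul 21 | Jul 21 |
| MariaDB migration | Export the source data locally with mysqldump, then migrate it over the private line to the MariaDB cluster on Huawei Cloud | Jul 24 | Jul 24 |
| Business verification | Compare data consistency for MariaDB, OBS and DDS, and run joint testing with the business systems | Jul 26 | Jul 26 |
| Cutover rehearsal | Rehearse the cutover of the mini program, web services, PC admin console, middleware services and other systems | Jul 28 | Jul 28 |
| Business cutover | Formal cutover of the 健康知识进万家 business system: stop the source services, run a final incremental sync, verify data consistency, and switch business traffic to Huawei Cloud | Jul 29 | Jul 29 |
| Operational support | Add CES monitoring for ECS, ELB, Redis, MariaDB and other cloud services, and perform inspections | Aug 11 | / |

[Issues encountered]:

| Issue | Resolution | Raised by | Owner | Raised | Resolved |
|---|---|---|---|---|---|
| After an EIP was bound to a Huawei Cloud ECS, the private network was unreachable over the VPN | Cause: the VPC is attached to a Cloud Connect instance with a 0.0.0.0/0 route pointing to Cloud Connect; the EIP route takes priority over the 0.0.0.0/0 route, so return packets left through the EIP. Fix: add the on-premises VPN CIDR to the VPC CIDRs configured in the Cloud Connect instance under the primary account | Customer | Our team | Jul 20 | Jul 21 |
| Association problem when switching between Huawei Cloud primary and sub-accounts | Cancel the association and associate again | Customer | Our team | Jul 21 | Jul 21 |
| Insufficient permissions when creating a project in CodeArts | In the primary account, go to Tenant Settings > Requirement Management > Set Project Creators and grant the required permissions | Customer | Our team | Jul 21 | Jul 21 |
| SMS service: when a signature involves third-party rights, no authorization letter could be provided | When applying for the signature, select "No" for third-party rights and upload the business license | Customer | Our team | Jul 21 | Jul 21 |
| Compatibility between MariaDB (Percona 5.7 version) and MySQL 5.7 | Confirmed compatible by the back-end team | Customer | Our team | Jul 24 | Jul 24 |
| OMS migration of OBS data failed with GET_SRC_OBJ_meta_FAILURE | Source object metadata could not be retrieved because the images had been blocked for legal reasons; these blocked images were not migrated | Customer | Our team | Jul 24 | Jul 24 |
| CodeArts pipelines do not support openEuler | Switched to EulerOS | Customer | Our team | Jul 25 | Jul 25 |
| After a migration task was paused, the target instance stayed read-only | End the migration task; the instance returns to read-write only after the task ends | Customer | Our team | Jul 25 | Jul 25 |
| Whether Redis cluster mode provides a proxy | Recommended Huawei Cloud GaussDB(for Redis) | Customer | Our team | Jul 25 | Jul 25 |
| Changing the Redis multi-db parameter failed because the data must be cleared first; errors persisted after clearing data in the console | Cleared the data with the `flushall` command; after a restart the parameter could be changed | Customer | Our team | Jul 26 | Jul 26 |
| RDS backups can only be restored to a new instance, not to the original instance | This is due to local SSD storage; restore to a new instance, then sync the data back to the original instance | Customer | Our team | Jul 28 | Jul 28 |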
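The business-verification step compares data between source and target before cutover. For the MariaDB part, one common approach is to collect a row count and a checksum per table on each side (for example with `SELECT COUNT(*)` and `CHECKSUM TABLE`) and diff the results. The sketch below covers only the diffing; the snapshot dictionaries and table names are hypothetical, and collecting them is left to whatever client the team uses. `CHECKSUM TABLE` values can differ across server versions or row formats, so a checksum mismatch is a prompt to investigate rather than proof of data loss.

```python
def diff_snapshots(source, target):
    """Compare {table: (row_count, checksum)} snapshots from the source and
    target databases and return human-readable discrepancies."""
    problems = []
    for table in sorted(set(source) | set(target)):
        if table not in target:
            problems.append(f"{table}: missing on target")
        elif table not in source:
            problems.append(f"{table}: only on target")
        else:
            (src_rows, src_sum), (dst_rows, dst_sum) = source[table], target[table]
            if src_rows != dst_rows:
                problems.append(f"{table}: row count {src_rows} != {dst_rows}")
            elif src_sum != dst_sum:
                problems.append(f"{table}: checksum mismatch")
    return problems


# Hypothetical snapshots taken right after the final incremental sync.
src = {"orders": (1200, 918273), "users": (350, 55512), "logs": (90, 1001)}
dst = {"orders": (1200, 918273), "users": (349, 55001)}
print(diff_snapshots(src, dst))
# ['logs: missing on target', 'users: row count 350 != 349']
```

An empty result is the signal to proceed with cutover; any entry points to the table that needs another incremental sync or a closer look.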

-

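As a convergence of frontier technologies such as artificial intelligence, cloud computing and digital twins, the metaverse has drawn increasing attention from major companies in recent years. Metaverse use cases keep emerging, covering not only marketing and promotion but also brand events and e-commerce sales, and they can effectively improve brand promotion and commercial conversion. The metaverse is also well worth building: from showcasing brand culture to 3D scenario-based interaction and interactive community operations, taking part in the metaverse lets companies ride the trend and strengthen their brand marketing.

01 Real-time cloud rendering solves the metaverse's persistent problems

Over several years of R&D and operations, 3DCAT found that traditional metaverse scenes face a number of difficulties in practice: high hardware requirements for users, fragmented content, weak data security, high app distribution costs, inefficient and costly dissemination, long lead times for moving to the cloud, and a lack of standardization. With the rapid development of 5G and cloud computing, real-time cloud rendering has reached a new level. It has become one of the core supporting technologies of the metaverse industry and an effective way to use and disseminate metaverse scenes at scale, helping companies close the commercial loop. To address these problems, 3DCAT, the metaverse real-time rendering cloud under Ruiyun Technology (瑞云科技), has launched the "3DCAT Metaverse All-Domain Cooperation Solution" (3DCAT元宇宙全域合作解决方案).

02 3DCAT real-time cloud rendering makes the metaverse available everywhere

Based on cloud computing, 3DCAT runs 3D applications in the cloud, where cloud resources compute and output graphics data in real time and push the results to end devices as a stream (streaming). End users can access a wide range of 3D applications interactively anytime, anywhere. 3DCAT provides one-stop cloud rendering at the SaaS, PaaS and IaaS layers, covering many types of scenarios and empowering the metaverse industry.

3DCAT's six strengths (cost-effectiveness, bulk purchasing, time-shared reuse, dedicated performance, customized solutions and enterprise-grade security) underpin your metaverse solution.

"Light terminal, heavy cloud": cloud rendering moves more of the graphics computing and storage to the cloud, greatly lowering the graphics and storage requirements on end devices and providing SaaS-level support for lighter, more mobile interactive devices.

03 3DCAT offers cost-effective pricing and quality service

Besides quality cloud computing and cloud rendering services, 3DCAT offers cost-effective pricing and round-the-clock 24/7 service to safeguard your metaverse solution.

04 3DCAT metaverse case studies and services

As a pioneer in metaverse real-time cloud rendering, 3DCAT already provides professional real-time cloud rendering services in the automotive and other industries, backing companies in different industries as they enter the metaverse.

1. Audi official website showcase: 3DCAT worked closely with FAW-Audi to provide a cloud rendering showcase solution for personalized online car ordering on the FAW-Audi official website, letting consumers experience the 4S dealership without leaving home.
2. Guangzhou Intangible Cultural Heritage Street (Metaverse): this virtual public cultural space, modeled on the arcade buildings of Beijing Road, uses 3DCAT's cloud graphics rendering power and in-house network streaming technology to push computed results to user devices in real time. People can enjoy intangible cultural heritage, play games, sample food, visit exhibitions and watch performances immersively without leaving home.
3. 2022 Guangdong International Tourism Industry Expo Metaverse: the expo used 5G, VR (virtual reality), glasses-free 3D, artificial intelligence and other technologies to present its exhibits, combining online 3D virtual tours with 360° panoramas. Its main feature was a cloud showcase center offering one-stop cloud services such as cloud experiences, cloud exhibitions and cloud promotion, creating an efficient business-matching channel for exhibitors and a variety of convenient services for the public.

This article, 《重塑元宇宙体验!3DCAT元宇宙实时云渲染解决方案来了》 ("Reshaping the metaverse experience: the 3DCAT metaverse real-time cloud rendering solution is here"), was compiled and published by 3DCAT, a real-time cloud rendering solution provider. Please credit the source and include a link when reposting.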

-

How can you build the enterprise asset management system you need on top of the DME engine?

-


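Huawei Cloud and Zanqi Technology (赞奇科技) recently released a joint cloud design collaboration solution, offering the design industry a more flexible and efficient production model and fully unleashing the productivity of digital work. Attending the launch ceremony were Mei Xiangdong, Chairman of Zanqi Technology; Jin Wei, General Manager of Zanqi Technology; Hong Fangming, President of Huawei Cloud China; Gao Jianghai, President of Huawei Cloud Basic Services; Chen Liang, President of Huawei Cloud Marketing and Ecosystem; Wang Zhen, General Manager of the Huawei Cloud & Computing Jiangsu Business Department; Zhang De, General Manager of Huawei Cloud Desktop Solutions; and others.

Huawei Cloud and Zanqi Technology jointly release the cloud design collaboration solution

In traditional design work, offices are fixed, collaboration is inefficient, hardware is expensive, and software and hardware must be replaced frequently. Meeting these challenges takes both extensive industry experience and solid technical skills, which is why the two companies joined forces.

Founded in 2010, Zanqi Technology focuses on the R&D and application of 3D visual computing cloud technology. It has independently developed and operates its own cloud rendering platform, providing cloud rendering and related cloud PaaS and SaaS services to users in film and TV, animation, architecture, interior and exterior design, gaming, industrial design and other fields.

Huawei Cloud provides high-performance, highly elastic basic services as well as comprehensive security services and a compliance system, giving the joint solution a solid technical foundation.

The cloud design collaboration solution offers the following advantages:

- More flexible ways of working: designers can connect to cloud desktops remotely anytime and anywhere, free from the constraints of physical devices and office locations, meeting anytime, anywhere mobile work needs.
- More efficient collaboration: Huawei Cloud's shared file storage scales elastically, with IOPS growing linearly with capacity, and it solves data inconsistency in multi-party collaboration, making collaborative production easy.
- Ready to use, pay as you go: flexible payment models greatly reduce fixed-asset investment in expensive professional hardware and software and better fit the design industry's flexible, elastic business.
- Fast access to top-tier capabilities: professional-grade GPUs and top-end CPU compute are available in just a few steps, solving the slow rendering caused by underpowered local computers and greatly boosting productivity.

Hong Fangming, President of Huawei Cloud China, said: "Together with Zanqi Technology, Huawei Cloud delivers a stable, secure and reliable new solution for all kinds of design scenarios as a cloud service, which we believe will become a new productivity tool for the design industry during its digital transformation. Huawei Cloud will continue to empower applications and enable data, serving as the fertile soil of the intelligent world, and work with partners with rich industry experience such as Zanqi to keep contributing value to the design industry's digital transformation."

Mei Xiangdong, Chairman of Zanqi Technology, said: "This year the pandemic has greatly accelerated digitalization, and cloud applications such as cloud meetings, cloud offices and cloud collaboration have grown explosively. Facing this trend and opportunity, we want to embrace change, use innovative ideas and technology to drive progress, and work with Huawei and industry partners to offer customers products that keep pace with the times, jointly opening a new digital era for the industry." Source: reposted from it168.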
